While useful for searchers, Google autocomplete has become a significant reputational risk to companies and individuals.

Negative Google autocomplete keywords displayed for your name or company can become the “first impression” of who you are.

This can be highly damaging if the suggested keywords displayed are inaccurate and include terms like scams, complaints, pyramid schemes, lawsuits or controversies.

With the rise of AI-generated search results, Google autocomplete keywords will become more visible – and potentially more damaging.

This article explores:

- How Google autocomplete keywords are derived.

- The reputational risks of Google autocomplete and Search Generative Experience (SGE).

- How to remove Google autocomplete keywords that are harmful, defamatory or inappropriate.

Google autocomplete: How does it predict searches?

Google autocomplete can shape a user’s perceptions before they ever press “Enter.”

As a user types a search term, Google autocomplete suggests words and phrases to complete the term.

This may save the searcher time and effort, but it can also steer them in an entirely different direction from what they were looking for in the first place.

Google observes several factors to determine what shows up in Google autocomplete results:

- Location: Google autocomplete results can be geolocated to where you are searching.

- Virality/trending topics: If there is a large surge in keyword searches around a specific event, person, product/service, or company, Google is more likely to pick up that keyword in Google autocomplete.

- Language: The language associated with a particular keyword search can influence the predictions that are displayed.

- Search volume: Consistent search volume around a specific keyword can trigger a keyword being added to Google autocomplete (even if that volume is relatively low).

- Search history: If you are logged in to your Google account, you’ll often see previous searches show up in Google autocomplete. This can be bypassed by searching in an incognito browser that does not take your search history into account.

- Keyword associations: If a keyword related to a brand, product, service or person is mentioned on a site Google deems trustworthy, an autocomplete keyword can be derived from that source. Although Google has not confirmed this as a factor, our team has noted that keyword associations often precede a negative autocomplete result.
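Google does not publish how these signals are weighted, so the following is only a toy sketch of how steady volume, a sudden surge, language and location could combine into a ranking. The `Candidate` class, the `toy_score` function and every weight in it are hypothetical and exist purely for illustration. They are not Google's algorithm.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    phrase: str
    weekly_searches: List[int]  # hypothetical weekly counts, oldest first
    language: str
    region: str


def toy_score(c: Candidate, user_language: str, user_region: str) -> float:
    """Illustrative score only; the weights are arbitrary assumptions."""
    if c.language != user_language:
        return 0.0  # language: skip predictions in another language
    history = c.weekly_searches[:-1]
    latest = c.weekly_searches[-1]
    baseline = sum(history) / len(history) if history else 0.0  # steady volume
    surge = max(0.0, latest - baseline)  # virality: jump over the baseline
    score = baseline + 2.0 * surge
    if c.region == user_region:
        score *= 1.5  # location: boost predictions popular where the user is
    return score


def rank(candidates: List[Candidate], user_language: str, user_region: str) -> List[str]:
    """Return phrases with a positive score, highest first."""
    scored = [(toy_score(c, user_language, user_region), c.phrase) for c in candidates]
    return [phrase for score, phrase in sorted(scored, reverse=True) if score > 0]


candidates = [
    Candidate("acme reviews", [100, 100, 100, 100], "en", "US"),
    Candidate("acme lawsuit", [5, 5, 5, 200], "en", "US"),
    Candidate("acme avis", [50, 50, 50, 50], "fr", "FR"),
]
print(rank(candidates, "en", "US"))
```

In this made-up data, a low-volume phrase with a sudden spike outranks a phrase with steady, higher volume, which mirrors how a negative term tied to a news event can surface quickly.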

Google autocomplete: Shaping reputations before clicking enter

I’ve seen a growing number of negative, defamatory, and oftentimes inaccurate autocomplete results that display prominently in a Google search for a brand, product, service or individual.

The predictive functionality of Google autocomplete and AI search engines can sometimes create negative associations with a company’s or a person’s name.

A company or individual might find itself linked to rumors, scandals, lawsuits or controversies that it had no involvement in simply due to the algorithm’s unpredictable nature.

Such associations can severely impact your reputation, erode customer trust, and hinder a company’s ability to attract new business.

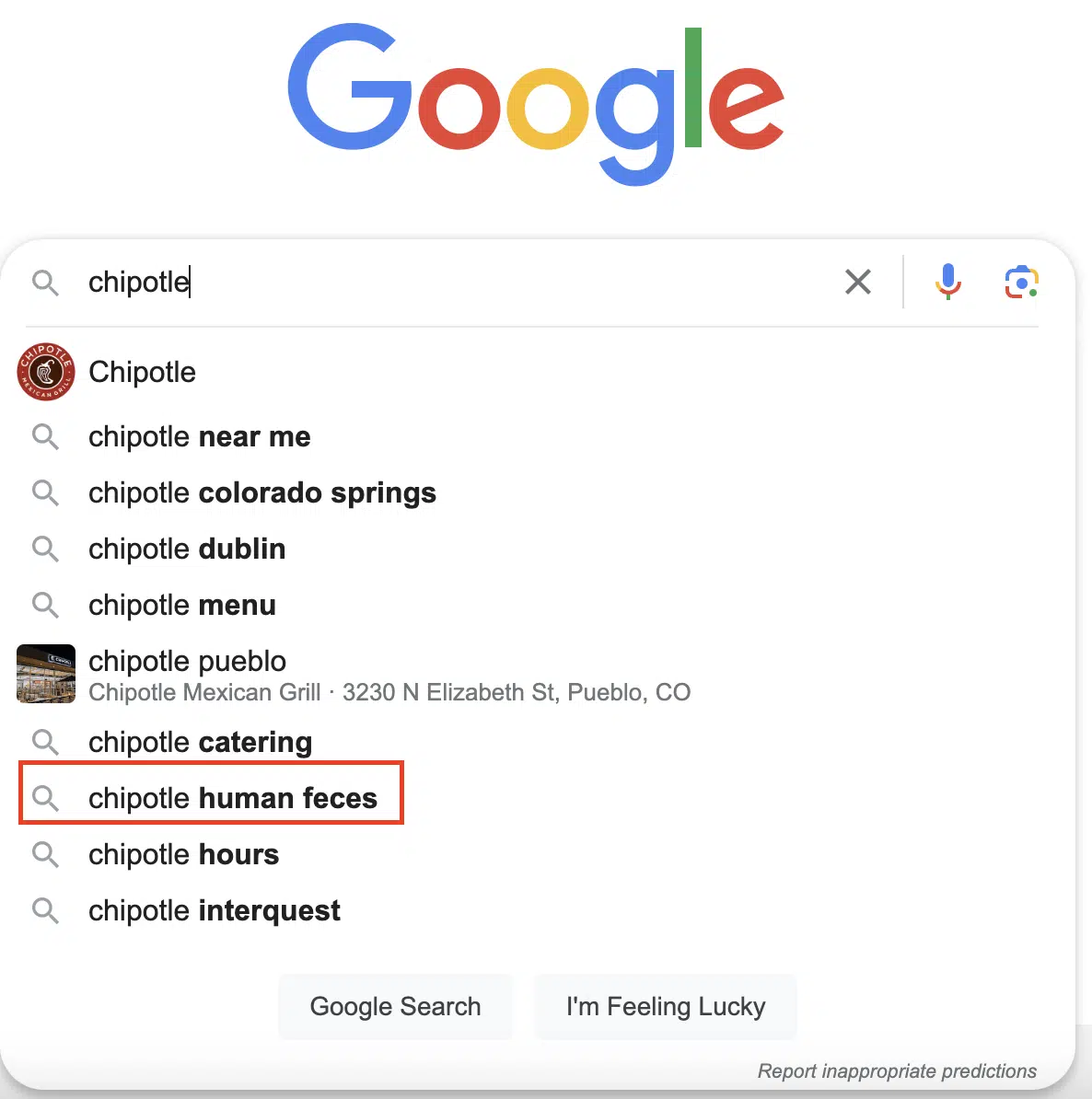

Let’s look at Chipotle, for example. A colleague recently noticed the autocomplete keyword “human feces” while searching for Chipotle.

The keyword “Chipotle” receives an average of 4 million searches monthly, per Semrush.

So, how many of those 4 million people saw that Google autocomplete keyword “human feces” and decided to go to Qdoba instead?

How many prospective investors in Chipotle stock saw this keyword and decided to take their money elsewhere?

Although it’s impossible to get exact answers to these questions, one thing is for sure: This negative keyword has the potential to cost Chipotle millions of dollars while simultaneously diminishing its brand value.

As of this writing, the inappropriate autocomplete search is not seen for the brand keyword (“Chipotle”). However, it is still displayed for various long-tail branded keywords and will likely continue fluctuating until the issue is addressed.


Amplification of misinformation and discrimination

Google autocomplete can inadvertently amplify false or misleading information by suggesting related queries or displaying incorrect snippets.

For instance, if a company or individual has been unfairly targeted by false rumors or negative publicity, Google may perpetuate these inaccuracies by suggesting them to users.

This can lead to a widespread acceptance of misinformation, causing lasting reputational damage.

We have worked with many individuals who have dealt with discriminatory keywords in Google autocomplete search results (gay, transgender, etc.), which can cause privacy, safety and reputation issues.

This type of content should never be displayed in Google autocomplete, but the algorithmic nature of the predictions can cause such keywords to be displayed.

How AI might impact Google autocomplete

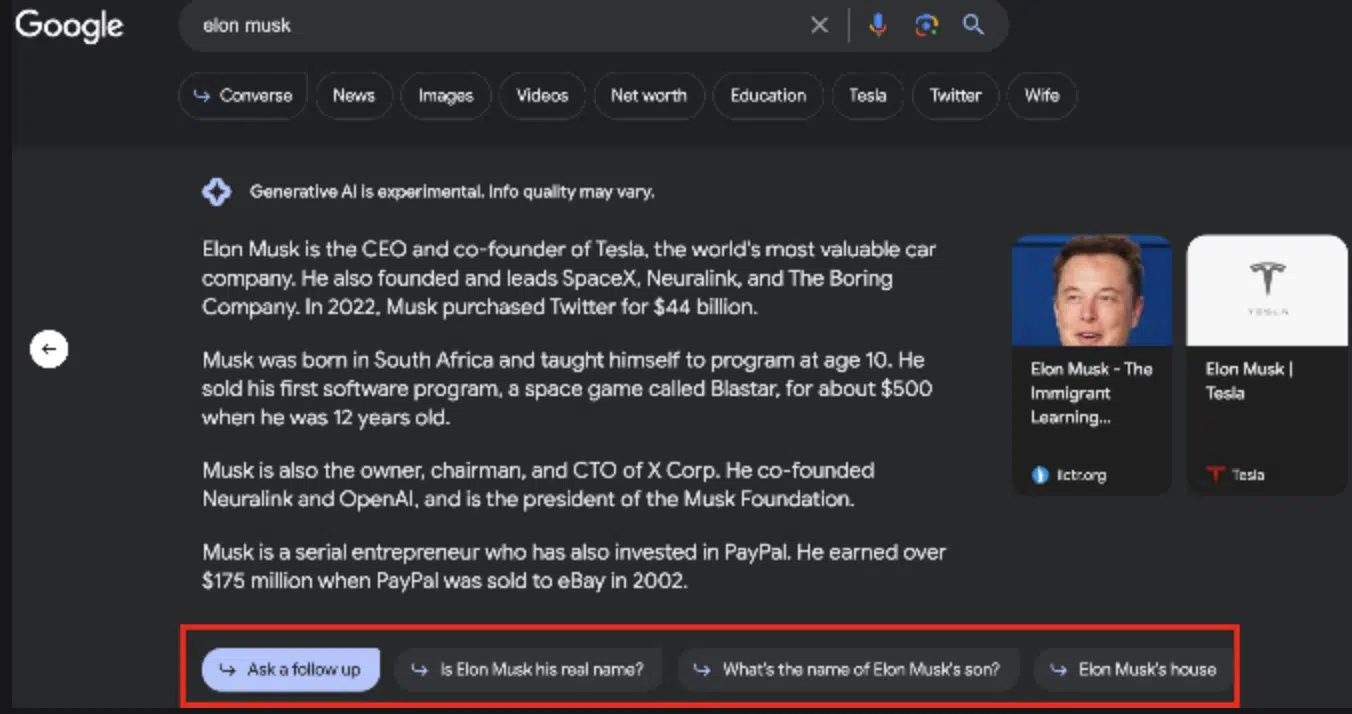

In Google’s SGE (beta), users are shown AI-generated answers directly above the organic search listings.

While these listings will be clearly labeled as “generated by AI,” they will stand out among the other answers simply because they’ll appear first.

This could lead users to trust these results more, even though they might not be as reliable as the other results on the list.

For a generative AI Google search, the autocomplete terms will now be featured in “bubbles” rather than in the traditional list you see in standard search results.

We have noticed a direct correlation between the autocomplete “bubbles” that are shown and the “traditional” autocomplete terms.

What does Google say about harmful and negative autocomplete keywords?

Google admits on its support page that its autocomplete predictions aren’t perfect.

Google has the following policies to deal with these issues:

- Autocomplete has systems designed to prevent potentially unhelpful and policy-violating predictions from appearing. These systems try to identify predictions that are violent, sexually explicit, hateful, disparaging, or dangerous or that lead to such content. This includes predictions that are unlikely to return much reliable content, such as unconfirmed rumors after a news event.

- If the automated systems miss problematic predictions, our enforcement teams remove those that violate our policies. In these cases, we remove the specific prediction in question and closely related variations.

How to remove Google autocomplete keywords

If you or your company encounter a negative, false, or defamatory autocomplete keyword that violates Google’s policies, follow the steps below to “report the inappropriate prediction.”

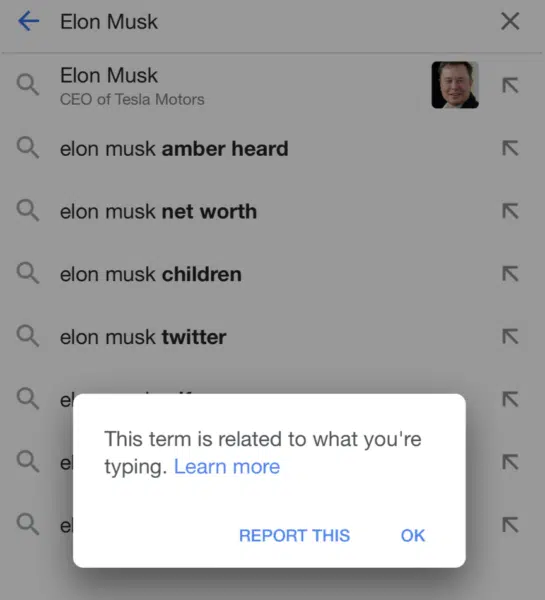

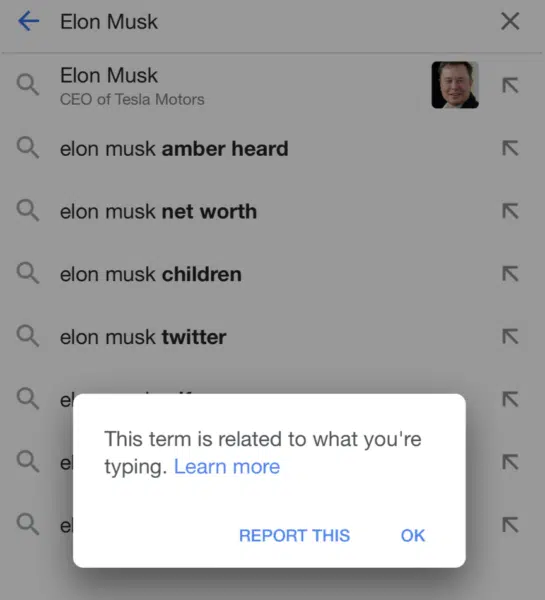

If you are on mobile, long-press the inappropriate prediction until a pop-up appears.

If there is a legal issue associated with the Google autocomplete prediction, you can request the removal of the content you think is unlawful at this link. You will need to choose which option applies to your situation.

Managing the reputational risks of Google autocomplete

The power of Google autocomplete and AI search engine results should not be underestimated.

The seemingly innocuous feature of Google autocomplete predictions holds the potential to shape perceptions, influence decisions and even damage reputations.

The reputational risks posed by negative autocomplete keywords, whether inaccurate, defamatory or discriminatory, are substantial and far-reaching.

The evolving landscape, with the integration of generative AI search engine results, further underscores the need for vigilant management of autocomplete keywords.
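One way to make that vigilance routine is to keep a running list of the suggestions you see for your brand and check it against the risk terms mentioned earlier. The sketch below assumes you collect the suggestions by hand (for example, from an incognito window) and paste them in. The `NEGATIVE_TERMS` list and the `flag_suggestions` helper are hypothetical names used for illustration, and the brand “acme” is made up.

```python
from typing import Dict, List

# Stems of the risk terms named in this article (scams, complaints,
# pyramid schemes, lawsuits, controversies). Extend the list as needed.
NEGATIVE_TERMS = ["scam", "complaint", "pyramid scheme", "lawsuit", "controvers"]


def flag_suggestions(suggestions: List[str],
                     terms: List[str] = NEGATIVE_TERMS) -> Dict[str, List[str]]:
    """Map each risky suggestion to the terms it contains (case-insensitive)."""
    flagged = {}
    for suggestion in suggestions:
        text = suggestion.lower()
        hits = [term for term in terms if term in text]
        if hits:
            flagged[suggestion] = hits
    return flagged


# Suggestions copied by hand from a search for a made-up brand.
observed = ["acme login", "acme scam", "Acme Lawsuits 2023", "acme careers"]
for suggestion, hits in flag_suggestions(observed).items():
    print(f"{suggestion!r} matches {hits}")
```

Running a check like this on a regular schedule makes it easier to spot a new negative keyword early and report it before it spreads to more long-tail searches.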

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.