Google has announced new markup aimed at news publishers looking to get their content into the Google Assistant. This new markup, named speakable, enables publishers to mark up the sections of a news article that are best suited to being read aloud by the Google Assistant, including on devices like Google Home.

The feature is now available to English-language users in the US. Google said it hopes to launch the capability in other languages and countries “as soon as a sufficient number of publishers have implemented speakable.”

Google currently lists this markup as a “BETA,” with the disclaimer that it is “subject to change.” “We are currently developing this feature and you may see changes in requirements or guidelines,” Google added.

The specification is now published on Schema.org.

Here are the technical guidelines:

- Don’t add speakable structured data to content that may sound confusing in voice-only and voice-forward situations, like datelines (location where the story was reported), photo captions, or source attributions.

- Rather than highlighting an entire article with speakable structured data, focus on key points. This allows listeners to get an idea of the story and not have the TTS readout cut off important details.
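To make the "focus on key points" guideline concrete, here is a minimal sketch of how a publisher might generate speakable structured data as JSON-LD. It assumes the Schema.org `SpeakableSpecification` type with a `cssSelector` list; the helper name `build_speakable_jsonld`, the URL, and the `.headline` and `.summary` selectors are hypothetical and stand in for whatever your own templates use. Because the markup is in beta, check the current documentation before relying on this shape.

```python
import json


def build_speakable_jsonld(headline, url, css_selectors):
    """Return a JSON-LD string that marks selected page sections as speakable.

    Hypothetical helper for illustration; not part of any Google library.
    """
    data = {
        "@context": "https://schema.org/",
        "@type": "WebPage",
        "name": headline,
        "speakable": {
            "@type": "SpeakableSpecification",
            # Point only at the key sections (for example a headline and a
            # short summary), not at the whole article body.
            "cssSelector": list(css_selectors),
        },
        "url": url,
    }
    return json.dumps(data, indent=2)


# Hypothetical page and selectors, for illustration only.
markup = build_speakable_jsonld(
    headline="Example headline",
    url="https://example.com/news/example-story",
    css_selectors=[".headline", ".summary"],
)
print(markup)
```

The resulting string would typically be placed inside a `<script type="application/ld+json">` element on the article page. Keeping datelines, captions, and attributions outside the selected elements is what keeps them out of the readout.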

Here are the content guidelines:

- Content indicated by speakable structured data should have concise headlines and/or summaries that provide users with comprehensible and useful information.

- If you include the top of the story in speakable structured data, we suggest that you rewrite the top of the story to break up information into individual sentences so that it reads more clearly for TTS.

- For optimal audio user experiences, we recommend around 20-30 seconds of content per section of speakable structured data, or roughly two to three sentences.
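The 20-30 second target can be checked roughly before publishing. The sketch below counts sentences and estimates readout time from word count. The speaking rate of 150 words per minute is an assumption made for illustration, not a figure from Google, and the function names are hypothetical; actual TTS pacing will vary.

```python
import re

# Assumed speaking rate; Google does not publish a figure in this guidance.
ASSUMED_WORDS_PER_MINUTE = 150


def estimate_section(text, words_per_minute=ASSUMED_WORDS_PER_MINUTE):
    """Return (sentence_count, estimated_seconds) for a speakable section."""
    # Naive sentence split: break after '.', '!' or '?' followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    word_count = len(text.split())
    seconds = word_count * 60 / words_per_minute
    return len(sentences), seconds


def within_guideline(text, min_seconds=20, max_seconds=30):
    """True if the estimated readout falls inside the 20-30 second range."""
    _, seconds = estimate_section(text)
    return min_seconds <= seconds <= max_seconds
```

A section that fails the check is a candidate for trimming or for splitting into two speakable sections. The sentence splitter is deliberately simple and will miscount abbreviations such as "U.S.", so treat the result as a rough signal.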

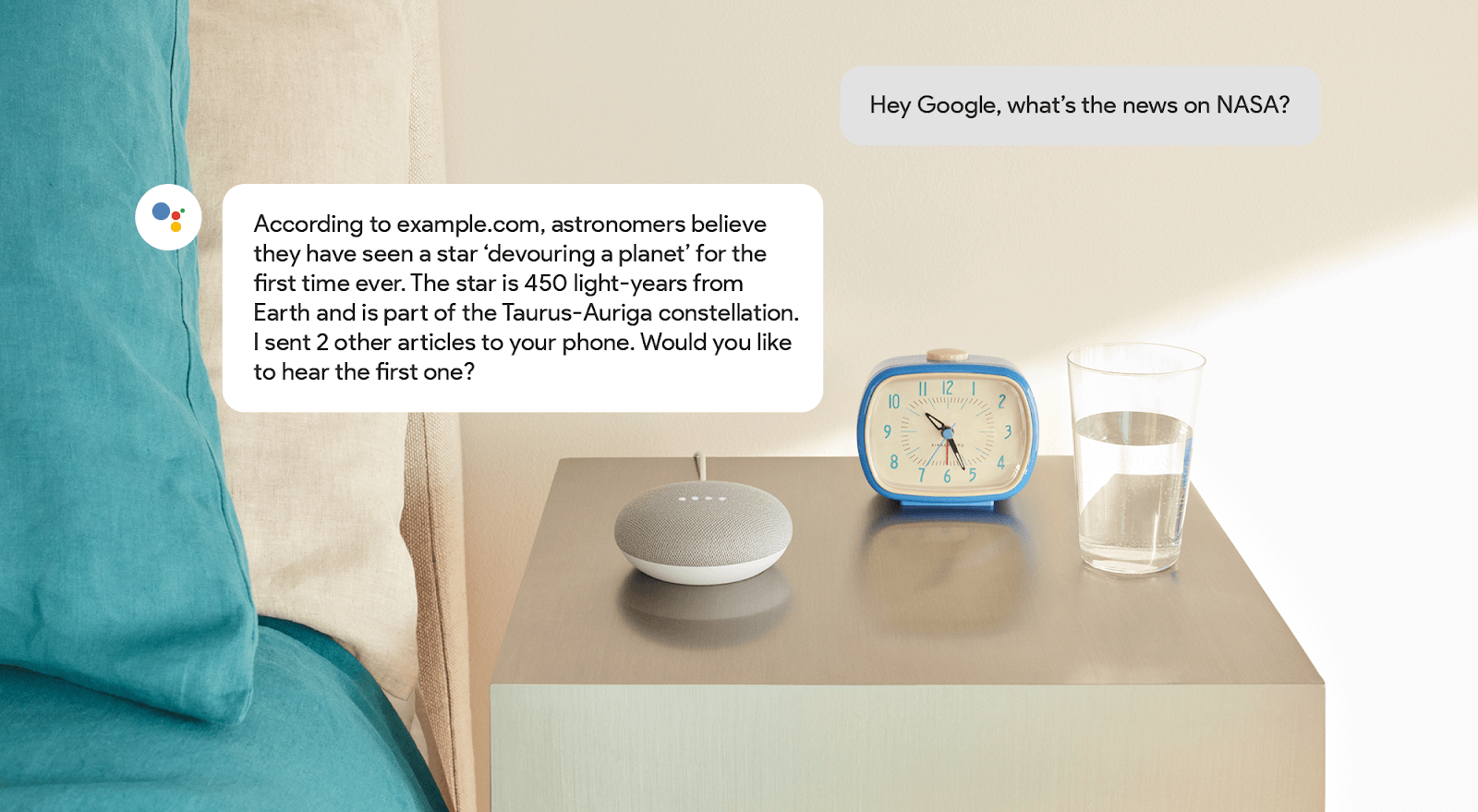

Google’s example of how this might work with a Google Home device is shown above.

To learn more, see the technical documentation.