In Part One of our three-part series, we learned what bots are and why crawl budgets are important. Let’s take a look at how to let the search engines know what’s important and some common coding issues.

How to let search engines know what’s important

When a bot crawls your site, there are a number of cues that direct it through your files.

Like humans, bots follow links to get a sense of the information on your site. But they’re also looking through your code and directories for specific files, tags and elements. Let’s take a look at a number of these elements.

Robots.txt

The first thing a bot will look for on your site is your robots.txt file.

For complex sites, a robots.txt file is essential. For smaller sites with just a handful of pages, a robots.txt file may not be necessary — without it, search engine bots will simply crawl everything on your site.

There are two main ways you can guide bots using your robots.txt file.

1. First, you can use the “disallow” directive. This will instruct bots to ignore specific uniform resource locators (URLs), files, file extensions, or even whole sections of your site:

User-agent: Googlebot
Disallow: /example/

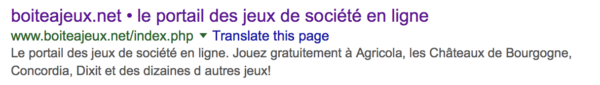

Although the disallow directive will stop bots from crawling particular parts of your site (and so save crawl budget), it will not necessarily stop those pages from being indexed and showing up in search results, as can be seen here:

The cryptic and unhelpful “no information is available for this page” message is not something that you’ll want to see in your search listings.

The above example came about because of this disallow directive in census.gov/robots.txt:

User-agent: Googlebot
Crawl-delay: 3
Disallow: /cgi-bin/
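One way to check how a rule set like this is interpreted is Python's standard-library `urllib.robotparser`. The sketch below feeds it the census.gov rules quoted above. The two test URLs are made-up paths used only for illustration, and real crawlers may handle edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Rules quoted above from census.gov/robots.txt.
ROBOTS_LINES = [
    "User-agent: Googlebot",
    "Crawl-delay: 3",
    "Disallow: /cgi-bin/",
]


def build_parser(lines):
    """Parse robots.txt lines directly, without a network request."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser


parser = build_parser(ROBOTS_LINES)

# Hypothetical URLs, used only to exercise the rules.
blocked_url = "https://www.census.gov/cgi-bin/example"
open_url = "https://www.census.gov/example.html"

print(parser.can_fetch("Googlebot", blocked_url))  # False
print(parser.can_fetch("Googlebot", open_url))     # True
print(parser.crawl_delay("Googlebot"))             # 3
```

Note that `can_fetch` only reports whether crawling is allowed. It says nothing about whether the URL can still end up in the index.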

2. Another way is to use the noindex directive. Noindexing a certain page or file will not stop it from being crawled; it will, however, stop it from being indexed (or remove it from the index). This robots.txt directive is unofficially supported by Google and is not supported at all by Bing (so be sure to have a User-agent: * set of disallows for Bingbot and any other bots besides Googlebot):

User-agent: Googlebot
Noindex: /example/

User-agent: *
Disallow: /example/

Obviously, since these pages are still being crawled, they will still use up your crawl budget.

This is a gotcha that is often missed: the disallow directive will actually undo the work of a meta robots noindex tag. This is because the disallow prevents the bots from accessing the page’s content, and thus from seeing and obeying the meta tags.
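This conflict can be checked mechanically. The following is a minimal sketch, assuming you already have each page's HTML on hand (for example, from a crawl export). The function names, URLs and sample pages are hypothetical, and the robots.txt matching is whatever Python's `urllib.robotparser` implements, which may differ from a given search engine in edge cases.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_meta_noindex(html):
    """True if the page carries a meta robots noindex directive."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return "noindex" in parser.directives


def hidden_noindex_pages(robots_lines, pages, user_agent="Googlebot"):
    """Return URLs whose noindex tag a compliant bot would never see,
    because robots.txt stops the bot from fetching the page at all."""
    robots = RobotFileParser()
    robots.parse(robots_lines)
    return [
        url
        for url, html in pages.items()
        if has_meta_noindex(html) and not robots.can_fetch(user_agent, url)
    ]


# Hypothetical site data for illustration.
robots_lines = ["User-agent: *", "Disallow: /example/"]
pages = {
    "https://example.com/example/old.html":
        '<html><head><meta name="robots" content="noindex"></head></html>',
    "https://example.com/archive/old.html":
        '<html><head><meta name="robots" content="noindex, follow"></head></html>',
    "https://example.com/index.html":
        "<html><head><title>Home</title></head></html>",
}

print(hidden_noindex_pages(robots_lines, pages))
# ['https://example.com/example/old.html']
```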

Another caveat with using a robots.txt file to herd bots is that not all bots are well-behaved, and some will even ignore your directives (especially malicious bots looking for vulnerabilities). For a more detailed overview of this, check out A Deeper Look at Robots.txt.

XML sitemaps

XML sitemaps help bots understand the underlying structure of your site. It’s important to note that bots use your sitemap as a clue, not a definitive guide, on how to index your site. Bots also consider other factors (such as your internal linking structure) to figure out what your site is about.

The most important thing with your Extensible Markup Language (XML) sitemap is to make sure the message you’re sending to search engines is consistent with your robots.txt file.

Don’t send bots to a page you’ve blocked them from. Also consider your crawl budget, especially if you decide to use an automatically generated sitemap. You don’t want to accidentally give the crawlers thousands of pages of thin content to sort through. If you do, they might never reach your most important pages.
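A quick consistency check between the two files might look like the sketch below. It assumes a standard sitemaps.org `urlset` document and uses Python's standard library only. The sample sitemap, the robots.txt rules and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Namespace used by standard sitemaps.org sitemap files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract every <loc> URL from a sitemap <urlset> document."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def blocked_sitemap_urls(sitemap_xml, robots_lines, user_agent="Googlebot"):
    """Return sitemap URLs that robots.txt tells the given bot not to crawl."""
    robots = RobotFileParser()
    robots.parse(robots_lines)
    return [
        url
        for url in sitemap_urls(sitemap_xml)
        if not robots.can_fetch(user_agent, url)
    ]


# Hypothetical inputs for illustration.
sitemap_xml = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/example/page.html</loc></url>
</urlset>"""
robots_lines = ["User-agent: *", "Disallow: /example/"]

print(blocked_sitemap_urls(sitemap_xml, robots_lines))
# ['https://example.com/example/page.html']
```

Any URL this returns is a mixed message: the sitemap invites the bot in while robots.txt turns it away.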

The second most important thing is to ensure your XML sitemaps only include canonical URLs, because Google looks at your XML sitemaps as a canonicalization signal.

Canonicalization

If you have duplicate content on your site (which you shouldn’t), then the rel=“canonical” link element tells bots which URL should be considered the master version.
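To audit this, you can read the canonical link element out of each duplicate page and confirm that every copy points at the same master URL. The sketch below is one possible approach using Python's built-in HTML parser. The URLs, the sample markup and the function names are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attrs.get("href")


def canonical_url(html):
    """Return the canonical URL declared in the page, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


def inconsistent_canonicals(pages, master_url):
    """Return URLs whose canonical is missing or is not the master URL."""
    return [
        url for url, html in pages.items()
        if canonical_url(html) != master_url
    ]


# Hypothetical duplicate pages for illustration.
master = "https://example.com/snowboards"
pages = {
    "https://example.com/snowboards?sort=price":
        '<head><link rel="canonical" href="https://example.com/snowboards"></head>',
    "https://example.com/snowboards?sort=name":
        "<head><title>Snowboards</title></head>",
}

print(inconsistent_canonicals(pages, master))
# ['https://example.com/snowboards?sort=name']
```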

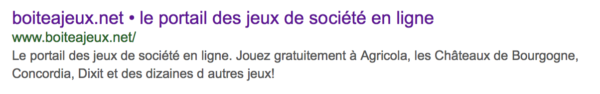

One key place to look out for this is your home page. Many people don’t realize their site might house multiple copies of the same page at differing URLs. If a search engine tries to index these pages, there is a risk that they will trip the duplicate content filter, or at the very least dilute your link equity. Note that adding the canonical link element will not stop bots from crawling the duplicate pages. Here’s an example of such a home page indexed numerous times by Google:

Pagination

Setting up rel="next" and rel="prev" link elements correctly is tricky, and many people struggle to get it right. If you’re running an e-commerce site with a great many products per category, rel="next" and rel="prev" are essential if you want to avoid getting caught up in Google’s duplicate content filter.

Imagine that you have a site selling snowboards. Say that you have 50 different models available. On the main category page, users can view the first 10 products, with a product name and a thumbnail for each. They can then click to page two to see the next 10 results and so on.

Each of these pages would have the same or very similar titles, meta descriptions and page content, so the main category page should have a rel="next" link element (no rel="prev", since it’s the first page) in the head portion of the hypertext markup language (HTML). Adding rel="next" and rel="prev" link elements to each subsequent page tells the crawler that you want these pages treated as a sequence.
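For the snowboard example, the link elements for each page can be generated as in the sketch below. The `?page=N` URL pattern, the example.com address and the function name are assumptions made for illustration; your own pagination URLs may look different.

```python
import math


def pagination_links(base_url, page, total_pages):
    """Return the rel="prev"/rel="next" link elements for one page
    of a paginated series. Page 1 is assumed to live at base_url."""
    if not 1 <= page <= total_pages:
        raise ValueError("page must be between 1 and total_pages")

    def page_url(n):
        # Hypothetical URL scheme: page 1 has no query string.
        return base_url if n == 1 else f"{base_url}?page={n}"

    links = []
    if page > 1:  # every page except the first points back
        links.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < total_pages:  # every page except the last points forward
        links.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return links


# 50 snowboards at 10 per page gives 5 pages.
total_pages = math.ceil(50 / 10)
base = "https://example.com/snowboards"

for page in (1, 3, 5):
    print(page, pagination_links(base, page, total_pages))
```

The first page gets only rel="next", the last page gets only rel="prev", and every page in between gets both.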

Alternatively, if you have a “view all” page, you could canonicalize to that “view all” page on all the pagination pages and skip rel="prev" and rel="next" altogether. The downside is that the “view all” page is probably what will show up in the search results. If that page takes too long to load, your bounce rate with search visitors will be high, and that’s not a good thing.

Without rel="canonical", rel="next" and rel="prev" link elements, these pages will compete with each other for rankings, and you risk tripping the duplicate content filter. Correctly implemented, rel="prev" and rel="next" will instruct Google to treat the sequence as one page, or rel="canonical" will assign all value to the “view all” page.

Common coding issues

Good, clean code is important if you want organic rankings. Unfortunately, small mistakes can confuse crawlers and lead to serious handicaps in search results.

Here are a few basic ones to look out for:

1. Infinite spaces (aka spider traps). Poor coding can sometimes unintentionally result in “infinite spaces” or “spider traps.” Issues like endless URLs pointing to the same content, or pages with the same information presented in a number of ways (e.g., dozens of ways to sort a list of products), or calendars that contain an infinity of different dates, can cause the spider to get stuck in a loop that can quickly exhaust your crawl budget.

Mistakenly serving a 200 status code in the Hypertext Transfer Protocol (HTTP) header of your 404 error pages is another way to present bots with a website that has no finite boundaries. Relying on Googlebot to correctly determine all the “soft 404s” is a dangerous game to play with your crawl budget.
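One rough way to test for this yourself is to request a URL that almost certainly does not exist and look at the status code that comes back. The sketch below assumes a random path is a fair stand-in for a missing page. The function names are hypothetical, and the status lookup is passed in as a parameter so the check can be tried without touching the network.

```python
import uuid
import urllib.error
import urllib.request


def fetch_status(url):
    """Return the HTTP status code for url (makes a real request)."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises for 4xx/5xx responses; the code is on the exception.
        return err.code


def serves_soft_404(base_url, get_status=fetch_status):
    """Probe a random, presumably nonexistent path under base_url.
    A 200 response suggests the site serves soft 404s."""
    probe_url = f"{base_url.rstrip('/')}/{uuid.uuid4().hex}"
    return get_status(probe_url) == 200


# Stubbed status lookups, so this example runs offline.
print(serves_soft_404("https://example.com", get_status=lambda url: 404))  # False
print(serves_soft_404("https://example.com", get_status=lambda url: 200))  # True
```

Against a live site, calling `serves_soft_404("https://example.com")` with the default `fetch_status` performs the real request. Because `urlopen` follows redirects, a missing page that redirects to a 200 page is also reported as a soft 404.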

When a bot hits large amounts of thin or duplicate content, it will eventually give up, which can mean it never gets to your best content, and you wind up with a stack of useless pages in the index.

Finding spider traps can sometimes be difficult, but using the aforementioned log analyzers or a third-party crawler like Deep Crawl is a good place to start.

What you’re looking for are bot visits that shouldn’t be happening, URLs that shouldn’t exist or substrings that don’t make any sense. Another clue may be URLs with infinitely repeating elements, like:

example.com/shop/shop/shop/shop/shop/shop/shop/shop/shop/…
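URLs like this are easy to flag automatically once you have a list of crawled URLs, for example from your server logs. The sketch below counts the longest run of identical consecutive path segments. The threshold of three repeats and the function names are arbitrary assumptions, not an established rule.

```python
from urllib.parse import urlparse


def longest_segment_run(url):
    """Return the length of the longest run of identical,
    consecutive path segments in the URL."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    longest = current = 0
    previous = None
    for segment in segments:
        # Extend the run if this segment repeats the previous one.
        current = current + 1 if segment == previous else 1
        longest = max(longest, current)
        previous = segment
    return longest


def looks_like_spider_trap(url, max_repeats=3):
    """Flag URLs where one path segment repeats max_repeats times or more."""
    return longest_segment_run(url) >= max_repeats


# Hypothetical URLs for illustration.
print(looks_like_spider_trap("https://example.com/shop/shop/shop/shop/"))  # True
print(looks_like_spider_trap("https://example.com/shop/boards/shop"))      # False
```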

2. Embedded content. If you want your site crawled effectively, it’s best to keep things simple. Bots often have trouble with JavaScript, frames, Flash and asynchronous JavaScript and XML (AJAX). Even though Google is getting better at crawling formats like JavaScript and AJAX, it’s safest to stick to old-fashioned HTML where you can.

One common example of this is sites that use infinite scroll. While it might improve your usability, it can make it difficult for search engines to properly crawl and index your content. Ensure that each of your article or product pages has a unique URL and is connected via a traditional linking structure, even if it is presented in a scrolling format.

In the next and final installment of this series, we’ll look at how bots are looking at your mobile pages, discuss if you should block bad bots, and dive into localization and hreflang tags. Stay tuned!
