Success in search engine optimization (SEO) requires not only an understanding of where Google’s algorithm is today but also insight into where Google is heading in the future.

Based on my experience, it has become clear to me that Google will place a stronger weight on the customer’s experience with page load speed as part of its mobile-first strategy. Given the investment Google has made in page performance, several indicators show how critical this factor is now and will be in the future. For example:

- AMP — Specifically designed to bring more information into the search engine results pages (SERPs) in a way that delivers on the customer’s intent most expeditiously. Google’s desire to quickly serve the customer “blazing-fast page rendering and content delivery” across devices and media begins with Google caching more content in their own cloud.

- Google Fiber — A faster internet connection for a faster web. A faster web allows for a stronger internet presence in our everyday lives and is the basis of the success of the internet of things (IoT). What the internet is today is driven by content and experience delivery. When fiber installations reach critical mass and gigabit becomes the standard, the internet will begin to reach its full potential.

- Google Developer Guidelines — 200-millisecond response time and a one-second top of fold page load time, more than a subtle hint that speed should be a primary goal for every webmaster.

Now that we are aware page performance is very important to Google, how do we as digital marketing professionals work speed and performance into our everyday SEO routine?

A first step would be to build the data source. SEO is a data-driven marketing channel, and performance data is no different from positions, click-through rates (CTRs) and impressions. We collect the data, analyze it, and determine the course of action required to move the metrics in the direction of our choosing.

Tools to use

With page performance tools, it is important to remember that any single measurement from one tool may be inaccurate. I prefer to use at least three tools for gathering general performance metrics so I can triangulate the data and validate each individual source against the other two.

Data is only useful when it is reliable. Depending on the website I am working on, I may have access to page performance data on a recurring basis. Some tools, like Dynatrace, Quantum Metric, Foglight and IBM Tealeaf, collect data in real time but come with a high price tag or limited licenses. When cost is a consideration, I rely more heavily on the following tools:

- Google Page Speed Insights — Regardless of what tools you have access to, how Google perceives the performance of a page is really what matters.

- Pingdom.com — A solid tool for gathering baseline metrics and recommendations for improvement. The added capability to test using international servers is key when international traffic is a strong driver for the business you are working on.

- GTMetrix.com — Similar to Pingdom, with the added benefit of being able to play back the user experience timeline in a video medium.

- WebPageTest.org — A bit rougher user interface (UI) design, but you can capture all the critical metrics. Great for validating the data obtained from other tools.

Use multiple tools to capitalize on the specific benefits of each, and check whether the data from all sources tells the same story. When it does not, there are deeper issues that should be resolved before the performance data can be actionable.
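One way to check whether the tools agree is to compare each reading against the median of all readings. The sketch below is only an illustration: the function name, the 25 percent tolerance and the load times are assumptions, not values from any of the tools.

```python
from statistics import median

def find_disagreements(readings, tolerance=0.25):
    """Return the tools whose reading differs from the median by more than
    `tolerance`, expressed as a fraction of the median."""
    mid = median(readings.values())
    return [tool for tool, value in readings.items()
            if abs(value - mid) > tolerance * mid]

# Hypothetical load times in seconds for the same URL.
readings = {"Pingdom": 4.2, "GTMetrix": 4.5, "WebPageTest": 7.9}
print(find_disagreements(readings))
```

An empty list suggests the sources tell the same story. Any tool that is listed is worth retesting before the numbers are used to make decisions.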

Sampling approach

While it is more than feasible to analyze only the single uniform resource locator (URL) you are working on, if you want to drive changes in the metrics, you need to be able to tell the entire story.

I always recommend using a sampling approach. If you are working on an e-commerce site, for example, and your URL focus is a specific product detail page, gather metrics about the specific URL, and then sample 10 product detail pages to produce an average. There may be a story unique to the single URL, or the story may be at the page-type level.
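The comparison between a focus URL and its 10-page sample is simple arithmetic. Here is a minimal sketch, assuming the load times have already been collected by hand or exported from a tool. The function name and all figures are hypothetical.

```python
from statistics import mean

def compare_to_sample(focus_seconds, sample_seconds):
    """Compare one URL's load time with the average of a page-type sample."""
    sample_average = mean(sample_seconds)
    return {
        "focus": focus_seconds,
        "sample_average": round(sample_average, 2),
        "difference": round(focus_seconds - sample_average, 2),
    }

# Hypothetical load times (seconds) for 10 product detail pages.
sample = [4.1, 4.4, 3.9, 4.6, 4.2, 4.0, 4.3, 4.5, 4.1, 3.9]
result = compare_to_sample(6.3, sample)
print(result)
```

A large positive difference points to a problem specific to the focus URL. A difference near zero suggests the issue sits in the shared page template.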

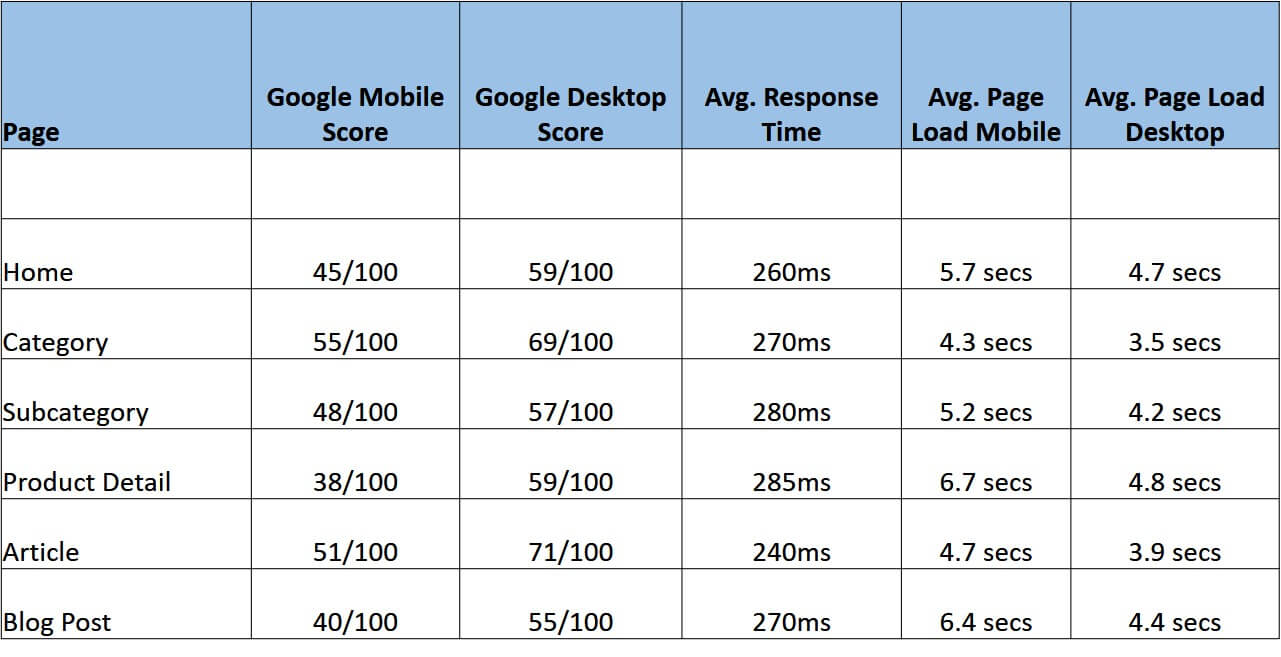

Below is an example of a capture of a 10-page average across multiple page types using Google Page Speed Insights as the source.

Evaluating this data, we can see all page types are exceeding a four-second load time. Our initial target is to bring these pages under a four-second page load time, with a response time of 200 milliseconds or better and a one-second above-the-fold (ATF) load time.
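The three targets can be written down once and checked against every page type. The sketch below assumes the metrics arrive as a simple dictionary. The key names and sample values are made up for illustration.

```python
# Targets from the text: 200 ms response, 1 s above the fold, 4 s page load.
TARGETS = {"response_ms": 200, "atf_seconds": 1.0, "load_seconds": 4.0}

def missed_targets(metrics):
    """Return each metric that exceeds its target and by how much.
    Simplification: a value exactly at the limit is treated as passing."""
    return {name: round(metrics[name] - limit, 2)
            for name, limit in TARGETS.items()
            if metrics[name] > limit}

# Hypothetical averages for one page type.
category_page = {"response_ms": 350, "atf_seconds": 2.4, "load_seconds": 3.8}
print(missed_targets(category_page))
```

The result lists only the metrics that still need work, which keeps the conversation with the IT team focused.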

Using the data provided, you can do a deeper dive into source code, infrastructure, architecture design and networking to determine exactly what improvements are necessary to bring the metrics in line with these goals. Partnering with the information technology (IT) team to establish service level agreements (SLAs) for load time metrics will ensure improvements remain an ongoing objective of the company. Without the right SLAs in place, IT may not maintain the metrics you need for SEO.

Using Pingdom, we can dive a bit further into what is driving the slower page loads. The waterfall diagram demonstrates how much time each page element requires to load.

Keep in mind that objects will load in parallel, so a single slow-loading object may slow ATF load but may not impact the overall page load time.

Review the waterfall diagram to find elements that are consuming excessive load time. You can also change the sort order to file size to identify any objects that are excessively large.
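If the waterfall data is copied out of the tool, the same review can be done with a short script. This is a sketch under assumptions: the records and their field names (`url`, `ms`, `bytes`) are hypothetical and do not match any particular export format.

```python
# Hypothetical request records taken from a waterfall view.
requests = [
    {"url": "/css/site.css", "ms": 180, "bytes": 42_000},
    {"url": "https://fonts.example.com/brand.woff2", "ms": 1450, "bytes": 95_000},
    {"url": "/img/hero.jpg", "ms": 900, "bytes": 1_800_000},
    {"url": "/js/app.js", "ms": 320, "bytes": 260_000},
]

def top_by(records, field, n=3):
    """Return the n records with the largest value for `field`."""
    return sorted(records, key=lambda record: record[field], reverse=True)[:n]

print("Slowest:", [r["url"] for r in top_by(requests, "ms", 2)])
print("Largest:", [r["url"] for r in top_by(requests, "bytes", 2)])
```

Sorting by time and by size separately is deliberate, because the slowest object is not always the largest one.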

A common issue is the use of third-party hosted fonts and/or images that have not been optimized for the web. Fonts are loaded above the fold, and if there are delays in response from a third-party font provider, it can bring the page load to a crawl.

When working with designers and front-end developers, ask if they evaluated web-safe fonts for their design. If web-safe fonts do not work with the design, consider Google Fonts or Adobe Typekit.

Evaluate by file type

You can also evaluate the page weight by file type to determine if there are excessive scripts or style sheets called on the page. Once you have identified the elements that require further investigation, view the page source in your browser and see where the elements load in the page. Look closely for excessive style sheets, fonts and/or JavaScript loading in the HEAD section of the document. The browser processes the HEAD section before it renders the BODY. If unnecessary calls exist in the HEAD, it is highly unlikely you will be able to achieve the one-second above-the-fold target.
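Page weight by file type is a simple grouping exercise. The following sketch assumes a list of request records with hypothetical `type` and `bytes` fields. Real tools report this breakdown in their own formats.

```python
from collections import defaultdict

def weight_by_type(records):
    """Total the request count and bytes per file type, heaviest type first."""
    totals = defaultdict(lambda: {"count": 0, "bytes": 0})
    for record in records:
        totals[record["type"]]["count"] += 1
        totals[record["type"]]["bytes"] += record["bytes"]
    return dict(sorted(totals.items(),
                       key=lambda item: item[1]["bytes"], reverse=True))

# Hypothetical requests for a single page.
page_requests = [
    {"type": "script", "bytes": 260_000},
    {"type": "script", "bytes": 140_000},
    {"type": "css", "bytes": 42_000},
    {"type": "css", "bytes": 38_000},
    {"type": "font", "bytes": 95_000},
    {"type": "image", "bytes": 1_800_000},
]
breakdown = weight_by_type(page_requests)
for file_type, total in breakdown.items():
    print(file_type, total["count"], total["bytes"])
```

A high request count for scripts or style sheets is a cue to check how many of them are called from the HEAD.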

Work with your front-end developers to ensure that all JavaScript is set to load asynchronously. Loading asynchronously allows the browser to keep parsing the page and fetching other resources without waiting for the prior script call to complete. JavaScript calls that are not required for every page or are not required to execute in the HEAD of the document are a common issue you find in platforms like Magento, Shopify, NetSuite, Demandware and BigCommerce, primarily due to add-on modules or extensions. Work with your developers to evaluate each script call for dependencies in the page and whether the execution of the script can be deferred.
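A quick audit can list the external scripts in the HEAD that carry neither the `async` nor the `defer` attribute. The sketch below uses Python's standard `html.parser` module. The class name, function name and sample markup are assumptions for illustration, and it ignores edge cases such as `type="module"` scripts, which browsers defer by default.

```python
from html.parser import HTMLParser

class HeadScriptAudit(HTMLParser):
    """Collect external scripts in <head> that have neither async nor defer."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attributes = dict(attrs)
            # Inline scripts have no src and are skipped here.
            if ("src" in attributes and "async" not in attributes
                    and "defer" not in attributes):
                self.blocking.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False

def blocking_head_scripts(html):
    audit = HeadScriptAudit()
    audit.feed(html)
    return audit.blocking

# Hypothetical page source.
sample_html = """<html><head>
<script src="/js/app.js"></script>
<script src="/js/analytics.js" async></script>
<script src="/js/widgets.js" defer></script>
<script>var inline = 1;</script>
</head><body><script src="/js/footer.js"></script></body></html>"""
print(blocking_head_scripts(sample_html))
```

Each script in the output is a candidate for the dependency review with your developers. Some may need to stay as they are if other code relies on their load order.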

Cleaning up the code in the HEAD of your webpages and investigating excessive file sizes are key to achieving a one-second above-the-fold load time. If the code appears to be clean, but the page load times are still excessive, evaluate response time. A response time above 200 milliseconds exceeds Google’s threshold. Tools such as Pingdom can identify response-time issues related to domain name system (DNS) and/or excessive document size, as well as network connectivity issues. Gather your information, partner with your IT team and place a focus on a fast-loading customer experience.
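For a rough spot check of response time without a commercial tool, the time until the response headers arrive can be measured with Python's standard library. This is a minimal sketch, not how Google or Pingdom measure: the function name is hypothetical, and the figure includes DNS lookup, connection setup and server wait as seen from wherever the script runs.

```python
import http.client
import time
from urllib.parse import urlsplit

def response_time_ms(url, timeout=10.0):
    """Milliseconds from sending a GET request until the status line and
    headers are received. Includes DNS lookup and connection setup."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        connection = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        connection = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        connection.request("GET", path)
        connection.getresponse()  # returns once the headers have arrived
        return (time.perf_counter() - start) * 1000
    finally:
        connection.close()

# Example (requires network access):
# print(response_time_ms("https://www.example.com/"))
```

Run it several times and from more than one location before drawing conclusions, since a single reading can be skewed by network conditions.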

Google’s algorithm will continue to evolve, and SEO professionals who focus on website experience, from page load times to fulfilling the customer’s intent, are working ahead of the algorithm.

Working ahead of the algorithm allows us to toast a new algorithm update instead of scrambling to determine potential negative impact. Improving the customer experience through SEO-driven initiatives demonstrates how a mature SEO program can drive positive impact regardless of traffic source.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.