Voice search is all the buzz out there, with some saying that by 2020, 50 percent of all queries could be by either image or voice. Personally, I think that estimate is aggressive, but the fact is that it’s a rapidly growing segment.

It’s also important to be aware that “voice search” may not be the correct label. I say this because many of the “queries” are really commands, like “call mom.” That is not something you would ever have entered into a Google search box.

Nonetheless, there are big opportunities for those who engage in voice early. Learning about conversational interfaces is hard work, and it will take practice and experience.

For that reason, I looked forward to seeing the Optimizing For Voice Search & Virtual Assistants panel at SMX Advanced, and today I’ll provide a recap of what the three speakers shared.

Upasana Gautam, Ziff Davis

First up was Upasana Gautam (aka Pas). Her focus was on how Google measures the quality of speech recognition as documented in this Google white paper.

Pas went over the five major metrics of quality discussed in the paper:

- Word Error Rate (WER).

- Semantic Quality (WebScore).

- Perplexity (PPL).

- Out-of-Vocabulary Rate (OOV).

- Latency.

Next, she went into the quality metrics in detail.

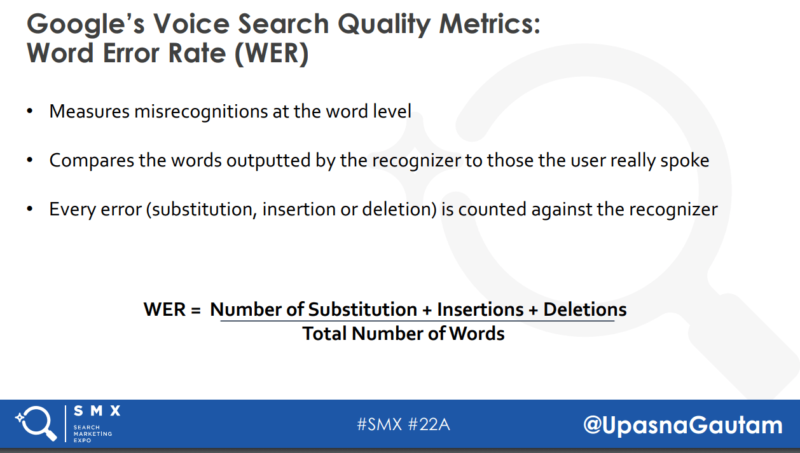

Word Error Rate (WER)

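This metric, which measures misrecognition at the word level, is calculated by comparing the recognizer’s output with a human transcription of the same audio: WER = (S + D + I) / N, where S, D and I are the numbers of substituted, deleted and inserted words, and N is the number of words in the transcription.

WER is not necessarily a good predictor of what will affect the final search results, so while it is measured, it’s secondary to some of the other metrics below.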
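To make the WER arithmetic concrete, here is a minimal Python sketch. It uses the conventional definition (word-level edit distance between a reference transcript and the recognizer’s hypothesis, divided by the number of reference words). The function name is our own, and we assume plain whitespace tokenization with no normalization of casing or punctuation, which a real evaluation would need.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (S + D + I) / N for one utterance.

    Uses word-level Levenshtein (edit) distance: the minimum number of
    substitutions, deletions and insertions that turns the reference
    transcript into the recognizer's hypothesis, divided by N, the number
    of words in the reference. Tokenization is a plain whitespace split.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j]: fewest edits turning the first i reference words
    # into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )

    return dist[len(ref)][len(hyp)] / len(ref)


# One substituted word out of three reference words.
print(word_error_rate("call mom now", "call tom now"))  # 0.3333333333333333
```

Because the edit distance is the cheapest alignment, S, D and I do not have to be counted separately; their sum falls out of the table.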

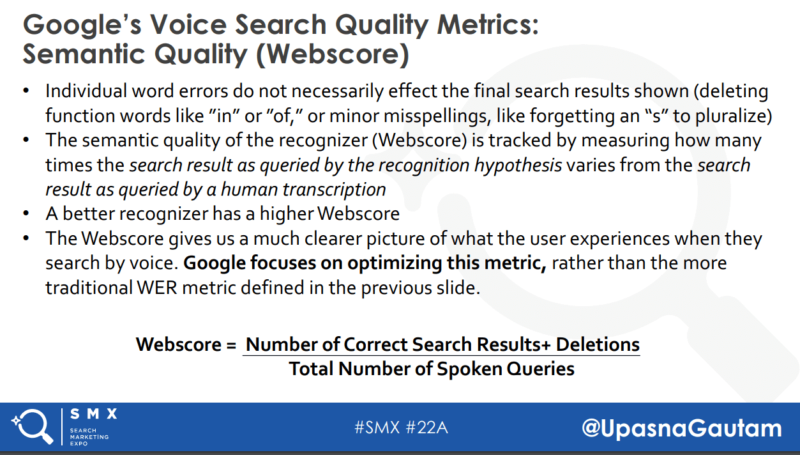

WebScore

WebScore tracks the semantic quality of the recognizer: more accurate recognition results in a higher WebScore. Temporal and semantic relationships are what WebScore is all about, and Google focuses significant energy on optimizing this metric. It is calculated as follows:

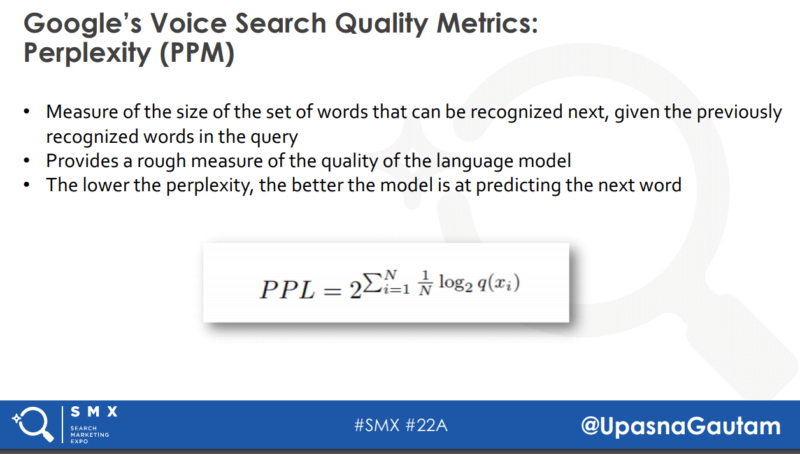
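WebScore is a ratio: correct search results divided by the total number of spoken queries. The Python sketch below assumes a query counts as correct when the top search result for the recognized text matches the top result for a human transcription of the same audio; that matching rule is our reading, not a confirmed detail of Google’s pipeline. The function name, data shape and URLs are hypothetical.

```python
from typing import Sequence, Tuple


def web_score(top_results: Sequence[Tuple[str, str]]) -> float:
    """Fraction of spoken queries counted as correct.

    Each pair is (top result for the recognizer's hypothesis,
    top result for the human transcription). A query counts as correct
    when the two top results match, even if some words were misrecognized.
    """
    if not top_results:
        raise ValueError("need at least one spoken query")
    correct = sum(1 for hyp_top, ref_top in top_results if hyp_top == ref_top)
    return correct / len(top_results)


# Hypothetical example: the second query's misrecognition changed the top result.
pairs = [
    ("https://example.com/weather", "https://example.com/weather"),
    ("https://example.com/mom", "https://example.com/tom"),
]
print(web_score(pairs))  # 0.5
```

Under this definition, a misrecognized word that leaves the top result unchanged costs nothing, which is one way to see why WER is treated as secondary.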

Perplexity

This is a measure of the size of the set of words from which the next word can be chosen, given the words already recognized in the query. It serves as a rough measure of the quality of a language model, and the lower the perplexity, the better. It is computed as 2 raised to the power of the average negative base-2 log probability that the language model assigns to each word of a test set.
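Here is a minimal Python sketch of the standard perplexity formula, PPL = 2^(−(1/N) Σ log₂ P(wᵢ | w₁…wᵢ₋₁)). We assume this standard definition matches the one in the paper. The function name is our own, and it takes the per-word probabilities as input rather than computing them from a real language model.

```python
import math
from typing import Sequence


def perplexity(word_probs: Sequence[float]) -> float:
    """PPL = 2 ** (-(1/N) * sum(log2 P(w_i | w_1 ... w_{i-1}))).

    word_probs[i] is the probability the language model assigned to the
    i-th word of a test text, given the words that came before it.
    """
    if not word_probs:
        raise ValueError("need at least one word probability")
    if any(p <= 0 or p > 1 for p in word_probs):
        raise ValueError("probabilities must be in (0, 1]")
    avg_neg_log2 = -sum(math.log2(p) for p in word_probs) / len(word_probs)
    return 2 ** avg_neg_log2


# A model that always spreads its probability evenly over 4 candidate
# words is "choosing among 4 words" at each step, so its perplexity is 4.
print(perplexity([0.25, 0.25, 0.25]))  # 4.0
```

The uniform case shows where the “size of the set of words” reading comes from: a perplexity of 4 behaves like a choice among 4 equally likely words at every step.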

Out-of-Vocabulary Rate

This metric tracks the share of spoken words that are not in the language model’s vocabulary. It’s important to keep this rate as low as possible, because each out-of-vocabulary word will ultimately result in a recognition error. Errors of this type may also cause errors in surrounding words due to subsequent bad predictions of the language model and acoustic misalignments.
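The OOV rate is a simple ratio of unknown word tokens to total word tokens in a test set. This Python sketch assumes exact, case-sensitive matching against a fixed vocabulary; the function name, vocabulary and query are hypothetical.

```python
from typing import Iterable, Set


def oov_rate(test_words: Iterable[str], vocabulary: Set[str]) -> float:
    """Share of word tokens in a test set that the recognizer's vocabulary lacks."""
    words = list(test_words)
    if not words:
        raise ValueError("need at least one test word")
    missing = sum(1 for word in words if word not in vocabulary)
    return missing / len(words)


# Hypothetical vocabulary and query: "please" is the only unknown word.
vocab = {"call", "mom", "weather", "today"}
print(oov_rate("call mom today please".split(), vocab))  # 0.25
```

Counting tokens rather than distinct words means a frequently spoken unknown word raises the rate every time it occurs, which mirrors how often it would cause a recognition error.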

Latency

This is the total time it takes to complete a search by voice. The contributing factors are:

- Time to detect the end of speech.

- Time to recognize the spoken query.

- Time to perform the web query.

- Time to return the results to the client.

- Time to render the result in the browser.
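One way to track these contributing factors is to record each stage separately and derive the total from them. The Python sketch below is a hypothetical structure (class and field names are our own), and it assumes the stages run strictly one after another; if any stages overlap in a real system, a simple sum would overstate the total.

```python
from dataclasses import dataclass, fields


@dataclass
class VoiceSearchLatency:
    """Per-stage timings for one voice search, in milliseconds."""

    end_of_speech_ms: float     # detect the end of speech
    recognition_ms: float       # recognize the spoken query
    web_query_ms: float         # perform the web query
    return_to_client_ms: float  # return the results to the client
    render_ms: float            # render the result in the browser

    def total_ms(self) -> float:
        # Assumes the stages run one after another, so the total is their sum.
        return sum(getattr(self, f.name) for f in fields(self))

    def slowest_stage(self) -> str:
        # Name of the stage that contributes the most time.
        return max(fields(self), key=lambda f: getattr(self, f.name)).name
```

Keeping the stages separate, rather than logging only the total, shows which one to optimize first.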

If you’re interested in developing voice assistant solutions, understanding this model is useful because it helps you tune the language model in your own conversational interface. One thing I’ve learned from the voice assistants we’ve developed is that choosing simpler activation phrases can improve overall results for our Actions or Skills.

Pas’s SMXInsights:

Katie Pennell, Nina Hale

Katie shared some data from a Backlinko study of 10,000 Google Home search results and a BrightLocal study of voice search for local results:

- 70 percent of Google Home results cited a website source (Backlinko).

- 41 percent of voice search results came from featured snippets (Backlinko).

- 76 percent of smart speaker users perform local searches at least weekly (BrightLocal).

The theme she reinforced throughout her talk was that not all topics work well for voice search.

For example, answers to entity searches are pulled from the Knowledge Graph, and your brand won’t get visibility from Knowledge Graph-sourced results.

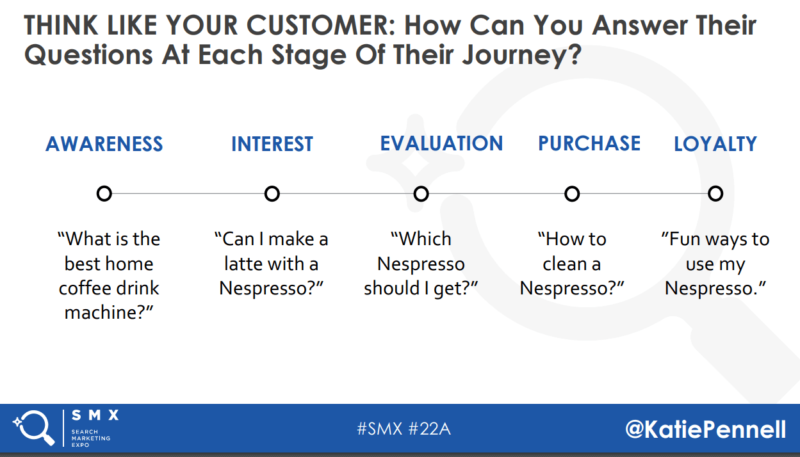

Web pages hosting your-money-or-your-life (YMYL) type content stand less of a chance of being offered up as a voice result. As you decide what content to target for voice search, carefully consider the overall customer journey.

You can get useful ideas and trends from many different sources:

- People Also Ask results shown by Google.

- Keywords Everywhere Chrome plug-in.

- Answer the Public.

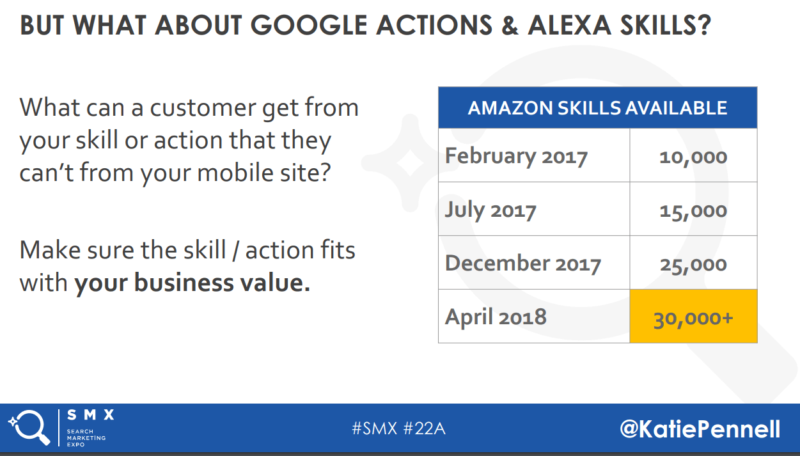

From there you can figure out which topics will be best for voice optimization. You can also consider developing your own Actions on Google app or Alexa Skill. Be careful, though, as there are lots of people already doing these things.

You can see from Katie’s chart that the market is crowded. Make sure you develop something that is useful enough for people to want to engage with it.

In addition, make sure any Action or Skill you build fits in with your business and delivers value. To do that, you can leverage:

- Keyword research.

- Social listening.

- Internal site search.

- User research.

- Customer service team.

Katie’s SMXInsights:

Benu Aggarwal, Milestone

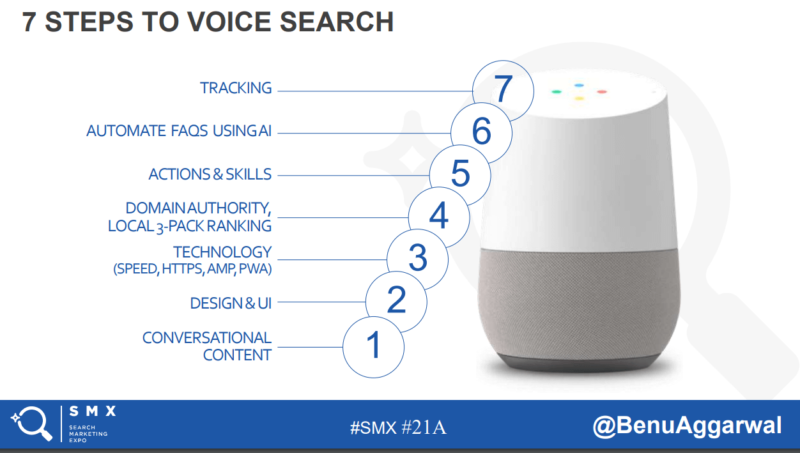

Benu started her presentation by discussing a seven-step process for voice search:

She also shared interesting data on how people use voice search:

One of the big factors in voice search is that a spoken reply from a device is typically a single answer. If you’re not the source of that answer, you miss out on that exposure entirely.

Benu also discussed the importance of offering conversational content across each stage of the customer journey and shared an example in the travel industry.

She and her team looked at the rankings for a client on queries like “hotel near me” and saw the client ranking at position 1.5 on mobile and at position 6.7 on desktop. This is a reminder to check your rankings on a mobile device when selecting candidate queries for which you might be able to win a featured snippet. If you do, you stand a better chance of being the response to spoken voice queries.

Featured snippets are generally seen for informational queries and are more often shown for long-tail queries. The questions they answer frequently start with why, what, how, when or who.

You should also seek to create content for every stage of the journey, but 80 percent of that content should be informational in nature, as informational content is what feeds most featured snippets.

Here are some thoughts on different content types to consider:

- Informational intent (guides, how-tos, etc.).

- Navigational intent (store locations, services, press releases, customer service info).

- Transactional intent (videos, product information, comparisons, product stories).

Additionally, here are some content tips:

- Satisfaction. Does the content meet user needs?

- Length. Does it offer the relevant fragment of a long answer rather than the whole thing?

- Formulation. Is it grammatically correct?

- Elocution. Will it be pronounced properly?

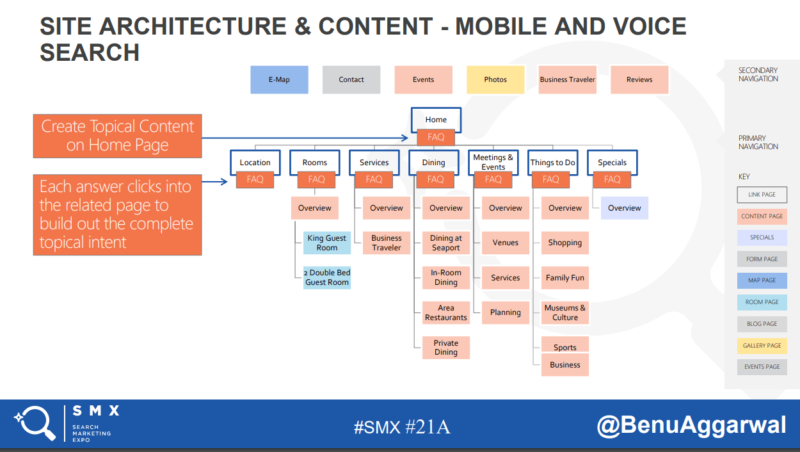

Benu also proposed a site architecture chart for a voice-optimized site as follows:

Benu’s concept is to integrate FAQs across every major page of your site. In addition, you need to get your organization to buy into this approach, including developers, designers, content creators and more.

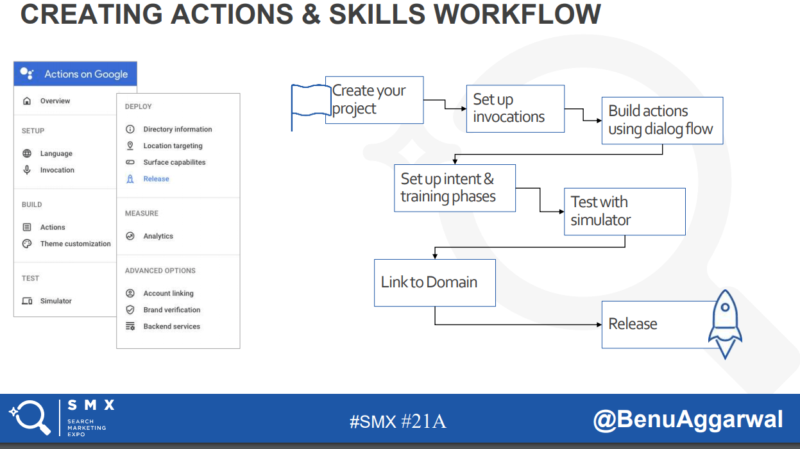

She also spoke about the process for creating Actions and Skills and included a flow chart for the process:

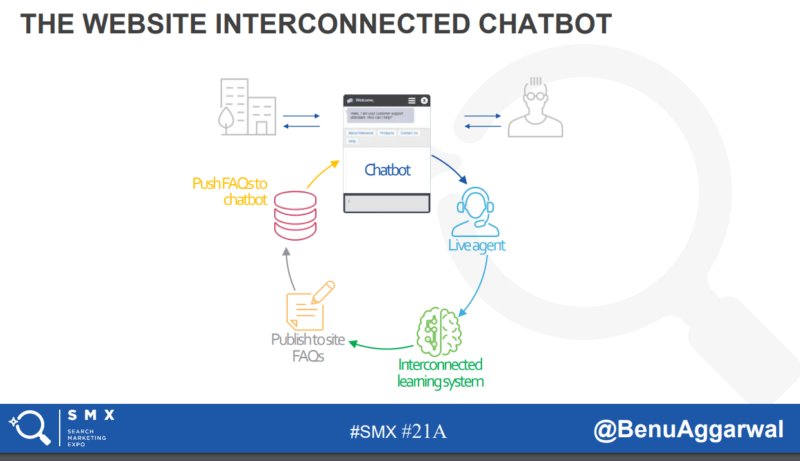

Benu is also an advocate for using multiple ways to connect and chat with customers, as captured in this chart:

Summary

Lots of great perspectives and info were shared. As you begin your own journey into understanding voice, be prepared to experiment, and be prepared to make some mistakes.

It’s all OK. The opportunity to get a leg up in this emerging space is real, and if you approach it with an open mind and a willingness to experiment, you can learn a lot and begin building mindshare with your target audience before your competition does.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.